A couple of weeks ago, I spoke on a panel at the IoT Tech Expo in London. The topic of the panel was “IoT and AI data analytics for intelligent decision making.” Combining two topics that are heavily hyped at the moment guaranteed a good attendance, but the question at the centre of the discussion was a meaningful one: can Artificial Intelligence help improve decision making when faced with the morass of data produced by Internet of Things systems? Perhaps a lofty goal, but one that led to an interesting discussion with a particularly engaged audience.

The barrier to entry to using Artificial Intelligence is lower than ever

The debate kicked off with the question of how accessible AI is to a company building out an IoT capability. Much is said in both the tech and mainstream press about the war for AI talent, and column inches are devoted to the ridiculous six-figure salaries allegedly being paid to top AI developers. Given this rather excitable coverage, it is easy to jump to the conclusion that AI capability is the sole preserve of the Googles and Facebooks that are hoovering up the world’s talent.

The reality, however, is that it has never been easier to deploy machine learning and artificial intelligence solutions. The leading cloud platform providers, Google Cloud, Amazon AWS and Microsoft Azure, all offer comprehensive machine learning and AI capabilities. All the key algorithms are well supported, including the main categories of machine learning (classification, regression, clustering, recommendation, prediction), visualisation, text and speech recognition and processing, chatbots, translation, image analysis and video analysis. These services mean that it is no longer necessary to have a PhD in AI in order to incorporate neural networks or deep learning algorithms into whatever you are building. An appreciation of how the algorithms work remains essential, though this too is easy enough to achieve through online learning platforms such as Udacity, Coursera and Udemy.

How is this relevant to the Internet of Things?

So why have both Artificial Intelligence and the Internet of Things come of age at the same time? It is worth considering how the underlying technology building blocks have developed over the decades. Although AI seems to be a very recent tech development, much of its theoretical groundwork is not new. The basis of regression analysis, one of the key mathematical tools, was invented 200 years ago, while the first neural networks were developed 50 years ago. What has changed recently is that advances in hardware, communications, data processing and storage have made viable, cost-effective applications possible.

The key inflection points were the launch of the iPhone and Amazon’s opening up of its IT infrastructure as the AWS cloud service. The iPhone not only changed forever the way people consume and generate data (think of social media, wearables, navigation apps, online video); the technology that made the smartphone possible also allowed ‘things’ to be connected, giving birth to the Internet of Things. Similarly, when Amazon kicked off the cloud services industry, companies of every size suddenly had access to virtually unlimited computing power, data storage and data handling capability.

So where does this leave us today? The Internet of Things is now fairly well established. We have cheap, low-power devices and sensors connected to the Internet, and the connectivity infrastructure to collate and process their data. These applications cut across smart homes, wearable devices, smart cities, transportation and industry. At the moment, however, the ability to communicate, collate and store data exceeds many companies’ ability to apply systematic intelligence or learning to it. Yes, there may be some analytics and visualisation, but in many cases companies are simply overwhelmed by the data their connected IoT devices generate.

This is where AI, or more accurately machine learning, comes in. Take the much-vaunted Industrial IoT, or Industry 4.0, heralded as the new industrial revolution: most deployments today are limited to monitoring and alerting. Similarly, most smart home applications are really connected homes, the only smart part of the system being the user, who can control an alarm system or smart thermostat remotely. Put simply, collating and storing data from sensors is easy, because data and cloud technologies are currently running ahead of companies’ AI and ML capability. Putting that data to good use is more complex, but it is not beyond the grasp of a moderately capable software organisation. Machine learning and other data analysis toolsets give companies the capability to transform data into something useful.
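To make the gap between monitoring and learning from data a little more concrete, here is a minimal sketch, assuming a stream of temperature readings from a single sensor. A fixed-threshold alert (typical of today's monitoring deployments) only fires above a hand-set limit, while a simple learned baseline, the mean and standard deviation of recent readings, flags values that are unusual for that particular sensor. The function names, window size and limits are illustrative assumptions rather than any product's API; a real system would more likely use a proper ML toolkit.

```python
from collections import deque
from statistics import mean, stdev


def threshold_alerts(readings, limit):
    """Monitoring and alerting: flag any reading above a fixed, hand-set limit."""
    return [i for i, r in enumerate(readings) if r > limit]


def baseline_alerts(readings, window=10, z_limit=3.0):
    """Flag readings that deviate strongly from a baseline learned from the data.

    The baseline is the mean and sample standard deviation of the previous
    `window` readings; a reading is flagged when its z-score exceeds `z_limit`.
    """
    if window < 2:
        raise ValueError("window must hold at least two readings")
    history = deque(maxlen=window)  # sliding window of recent readings
    flagged = []
    for i, r in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(r - mu) / sigma > z_limit:
                flagged.append(i)
        history.append(r)
    return flagged


# Hypothetical temperature readings (°C) with a sudden jump at index 10.
readings = [20.0, 20.1, 19.9, 20.2, 20.0, 19.8, 20.1, 20.0, 19.9, 20.1, 26.0, 20.0]
print(threshold_alerts(readings, limit=30.0))  # → []
print(baseline_alerts(readings))               # → [10]
```

The jump to 26 °C stays under the fixed 30 °C limit, so the monitoring rule stays silent, but it stands out sharply against what this sensor normally reports. Learning the baseline from the data, rather than hard-coding it, is the small step from "connected" to "smart".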

In the Cloud or at the Edge?

One of the supposed trends is a shift of AI computation from the cloud to the edge, with many analysts predicting a reversal of the trend that has seen most data processing take place centrally in the cloud. This is spurred by the need to take decisions in real time, closer to where the action is, without depending on an unreliable link back to the cloud. The advent of processors dedicated to AI number-crunching is making this possible. Qualcomm, Apple, Amazon and Google are all apparently developing their own hardware, much of it aimed at running applications such as voice recognition and facial recognition on devices. In many ways, this makes sense. Where the amount of data to be analysed is relatively large, such as video in a facial recognition application, and where latency matters, it is clearly beneficial to carry out this processing on your smartphone or smart speaker.

Nevertheless, there remain countless applications where the economies of scale of the cloud, and its ability to process aggregated information from all devices, mean that centralised processing will remain the preferred option. Additionally, IoT devices are often designed to be as cheap as possible and are in many cases battery-powered. Such devices will be capable of sending data to the cloud, but limits on their computational power or battery life will make it prohibitive to run advanced AI or ML processes on board.
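A common compromise between the two is to split the work. As a minimal sketch, assuming hypothetical sensors that report numeric readings, the device does only a cheap reduction of each window of raw data into a compact summary, and the cloud combines summaries from the whole fleet, which is exactly the kind of aggregate view no single device can produce. The `WindowSummary` type, the function names and the JSON payload format are illustrative assumptions, not any vendor's API.

```python
import json
from dataclasses import asdict, dataclass
from statistics import mean


@dataclass
class WindowSummary:
    """Compact summary of one window of raw readings from a single device."""
    device_id: str
    count: int
    minimum: float
    maximum: float
    average: float


def summarise_on_device(device_id, readings):
    """Edge step: cheap enough for a constrained device to run before transmitting."""
    if not readings:
        raise ValueError("need at least one reading")
    return WindowSummary(device_id, len(readings), min(readings), max(readings), mean(readings))


def to_payload(summary):
    """Serialise a summary for sending to the cloud (hypothetical JSON format)."""
    return json.dumps(asdict(summary))


def aggregate_in_cloud(summaries):
    """Cloud step: combine summaries from many devices into a fleet-wide view."""
    if not summaries:
        raise ValueError("need at least one summary")
    total = sum(s.count for s in summaries)
    return {
        "devices": len(summaries),
        "readings": total,
        # Weight each device's average by how many readings it covers.
        "fleet_average": sum(s.average * s.count for s in summaries) / total,
        "fleet_max": max(s.maximum for s in summaries),
    }


a = summarise_on_device("sensor-a", [1.0, 2.0, 3.0])
b = summarise_on_device("sensor-b", [10.0])
print(to_payload(a))
print(aggregate_in_cloud([a, b]))
```

The device sends a handful of numbers per window instead of every raw reading, which saves bandwidth and battery, while the heavier, fleet-wide analysis stays in the cloud where compute is cheap.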


AI is making a difference in ways that are going unnoticed

So does this mean that AI is not delivering any meaningful value beyond its use by the tech giants? One of the criticisms levelled at AI evangelists is that much is promised and little is delivered. Given the amount of hype, there is some truth in this, and the technology is never going to justify the hype, or possibly even the investment dollars going into this space. This criticism, however, misses the point. Many companies are putting machine learning (and occasionally deep learning) to good use, but in ways that are not particularly visible to their customers. A company may use AI algorithms in its cyber-security defences; demand and supply across its supply chain may be optimised by machine learning; its maintenance operations may use predictive analytics to reduce the cost of routine maintenance; sentiment analysis may be deployed to monitor the performance of its customer support operation; and ‘digital twins’ of its physical machines can be used to monitor and optimise their performance.
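Predictive maintenance is perhaps the easiest of these to picture. As a minimal sketch, assuming a single machine reports one vibration reading per day and that wear grows roughly linearly, a least-squares trend line can be extrapolated to estimate how many days remain before the reading crosses a maintenance threshold. The threshold, the units and the linear-wear assumption are all hypothetical; production systems typically use richer models and many more signals.

```python
def fit_trend(times, values):
    """Ordinary least-squares fit of values = slope * time + intercept."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired observations")
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("observation times must not all be equal")
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, v_mean - slope * t_mean


def days_until_threshold(times, values, threshold):
    """Extrapolate the linear trend to estimate days until `threshold` is reached.

    Assumes `times` is in ascending order. Returns None when there is no
    upward trend, i.e. no predicted crossing.
    """
    slope, intercept = fit_trend(times, values)
    if slope <= 0:
        return None
    crossing_time = (threshold - intercept) / slope
    return max(0.0, crossing_time - times[-1])


# Hypothetical daily vibration readings (mm/s) from one machine.
days = [0, 1, 2, 3, 4]
vibration = [1.0, 1.5, 2.0, 2.5, 3.0]
print(days_until_threshold(days, vibration, threshold=5.0))  # → 4.0
```

Instead of servicing every machine on a fixed schedule, maintenance can then be booked shortly before each machine is predicted to need it, which is where the cost saving comes from.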

None of these applications is going to make the headlines. The benefits are largely below the radar, aimed either at harnessing data to improve the effectiveness of existing operations or at reducing their cost so that an operation can scale more cheaply. Taken together, however, they can deliver the operational efficiency gains that make all the difference between success and failure. Precisely because they are not glamorous, these advances are taking place away from the spotlight.

The fear of AI – bias

The discussion became most animated when a member of the audience raised the topic of AI bias. This is one of the main concerns of ethicists and politicians, who worry that the bias of a developer or a community can be reflected, and indeed propagated, by an AI system. One example that hit the headlines a couple of years ago was Microsoft’s Tay, a chatbot that interacted with Twitter users and picked up neo-Nazi slogans and other foul beliefs. Although this was largely an academic experiment that took an unexpected direction, it raised serious concerns. More recently, a House of Lords report recommended that AI teams reflect the diversity of society so as not to cement bias and privilege.

I am more relaxed. Where AI or machine learning is used to process quantitative data, that is, numbers, bias is purely a technical concern. In other words, it can simply result in a sub-optimal solution, though that may well be an outcome that is good enough for a company or its customers.

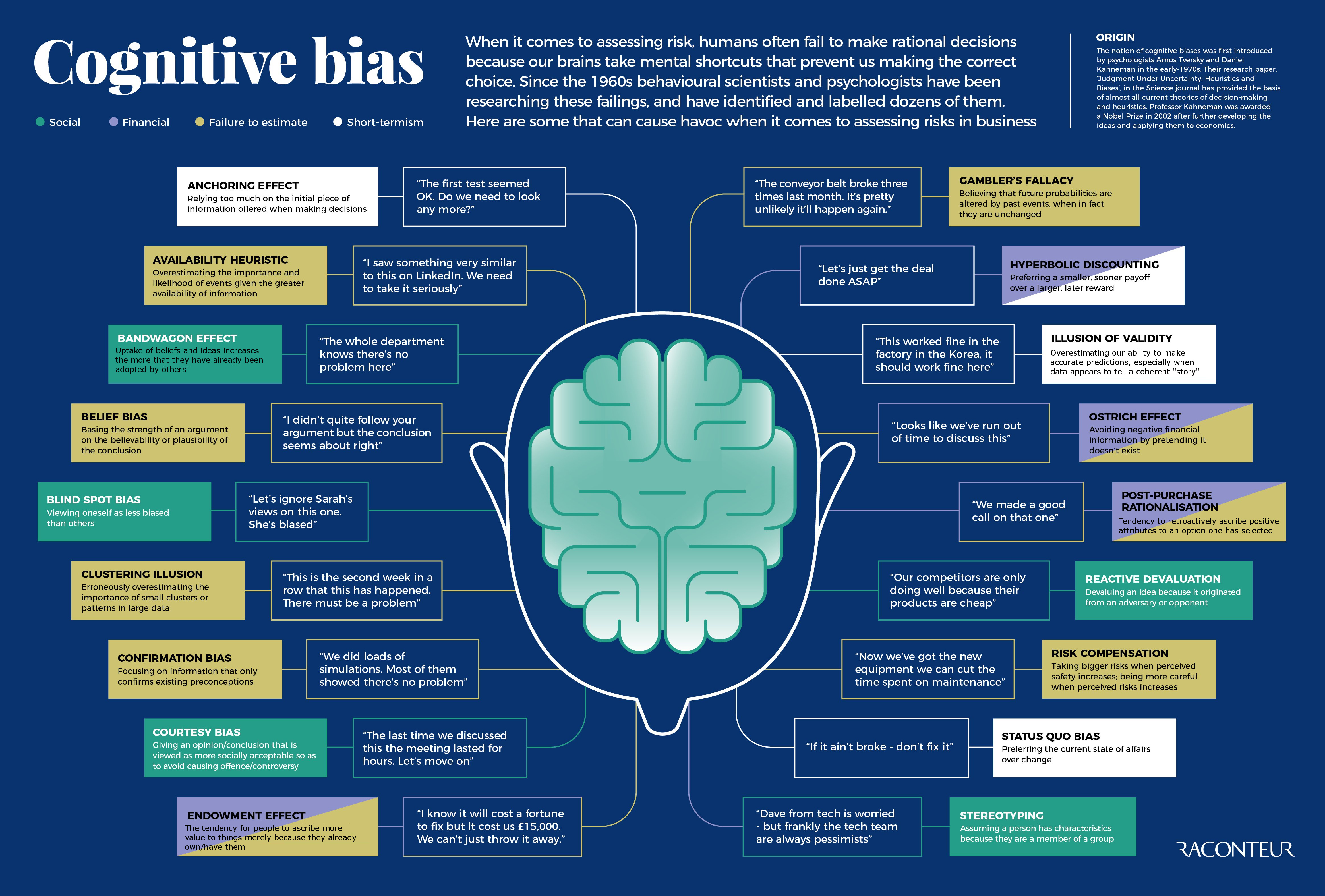

The social concerns are strongest where natural language processing, facial recognition or other means of interacting with people are involved. Here, of course, there are risks. However, when compared with human decision-making, I very much suspect that artificial intelligence will prove a fairer arbiter and a more rational decision maker than most humans. Even setting prejudice aside, our ability to make rational decisions is inhibited by a series of mental shortcuts that allow us to make rapid decisions in a world of immeasurable complexity. These shortcuts have the side effect of introducing errors into our decisions, meaning that humans are pretty poor decision makers. Much is said about having humans supervise AI systems. What about having AI systems supervise humans?

Conclusion

The discussion clearly showed that across a diverse range of industries, from finance to telecoms and from manufacturing to transportation, companies are now grappling with how best to adopt AI in their operations. The consensus was that this is not merely a fad that will be forgotten in a few years. The transformative potential is clear, yet the challenge of how and where to implement AI remains daunting.